This project is a technical demo I made to display the possibilities of using Virtual Reality as a maintenance training medium.

Especially in industries like aviation, equipment is costly and the vehicles and their mechanics are often complicated. Furthermore, grounding an airplane for training purposes means missing out on the income it would otherwise generate by flying.

By largely replacing the physical training with a virtual version, skills can be improved and new knowledge can be gathered, all without keeping an airplane out of active service.

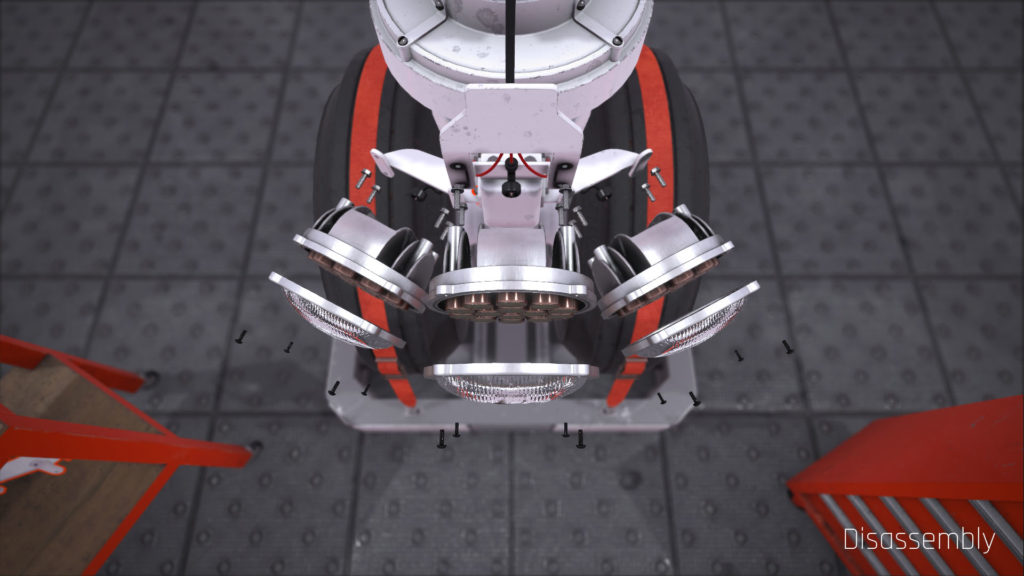

I started out by collecting data and blueprints on airplane components to see what would be a relevant item to display. Using this reference data and images, I was able to recreate the nose gear of a Gulfstream G650.

Since this was going to be a mechanically focused application, quite a few parts needed to be able to be disassembled. This is where my personal knowledge and skill in engineering came in very handy; I have a good understanding of how (mechanical) things work and are put together.

To make the experience immersive, I modeled the actual tools that would be needed during the disassembly process and programmed them to interact with all the parts.

```csharp
// Drill use function; takes the linear controller trigger value as input.
// Switches states on trigger pull or when the limit is reached.
public override void Use(float _Amount){
    if (_Amount > 0.1f){
        CheckLimit();

        // Runs once per trigger pull: toggle every active head
        if (!m_Triggered){
            m_Triggered = true;
            for (int i = 0; i < m_Heads.Length; i++){
                if (m_Heads[i].isActiveAndEnabled)
                    m_Heads[i].PickToggle();
            }
        }

        if (!m_DrillAtLimit){
            m_Drillstate = DrillStates.ROTATING;
            Turn(_Amount);
            Effects(_Amount);
        }
        else{
            // Limit reached: keep the effects running but stop turning
            m_Drillstate = DrillStates.LIMIT;
            Effects(_Amount);
        }
    }
    else{
        // Trigger released: reset to idle
        m_Triggered = false;
        m_DrillAtLimit = false;
        m_Drillstate = DrillStates.IDLE;
        Effects(0);
    }
}
```

To further aid immersion, I designed a fitting environment in the form of a hangar, which I recreated from photo references. The environment uses lightmaps and reflection probes to optimize its performance. Around the interactive area I placed light probes so that the moving parts get the same visual fidelity as the static surroundings.

One of the benefits of a Virtual Reality environment is the ability to add quality-of-life improvements to training exercises. For example, in real life you need to collect every part on a surface or in a container. While this is good practice, it can be dropped when the main goal of the application is to train a certain disassembly order. In this case I solved it by implementing a so-called ‘zero-gravity’ environment: every tool or part that is dropped keeps floating in the air, as if there were no gravity. Apart from looking great, this speeds up the training process considerably, because the trainee does not have to store or retrieve parts between steps.
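The zero-gravity behaviour described above could be sketched in Unity roughly as follows. This is a minimal sketch, not the project's actual implementation: the component name `FloatWhenDropped` and the damping values are assumptions for illustration. It relies on `Rigidbody.useGravity`, plus the `drag` and `angularDrag` properties (recent Unity releases may expose the damping properties under different names).

```csharp
using UnityEngine;

// Hypothetical component: attach to every tool or part that should float when dropped.
[RequireComponent(typeof(Rigidbody))]
public class FloatWhenDropped : MonoBehaviour
{
    // Assumed damping values so a released object drifts to a stop
    // instead of sailing away with the velocity of the hand.
    public float m_Drag = 2.0f;
    public float m_AngularDrag = 2.0f;

    private void Awake(){
        Rigidbody t_Body = GetComponent<Rigidbody>();
        t_Body.useGravity = false;          // this body ignores Physics.gravity
        t_Body.drag = m_Drag;               // slows down linear motion over time
        t_Body.angularDrag = m_AngularDrag; // slows down rotation over time
    }
}
```

Disabling gravity per object, rather than setting the global gravity to zero, would leave any other physics objects in the scene unaffected.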

I believe that no VR experience is complete without proper sound effects. Therefore, I added custom impact sounds to all tools and surfaces, with a volume based on the velocity of the impact, and placed a reverb area throughout the environment to create an echoing effect similar to a real hangar.

```csharp
using UnityEngine;

// Spawns a positional sound effect at the impact point, with velocity-driven volume.
// The pitch is varied to avoid monotone effects.
public class ImpactAudio : MonoBehaviour
{
    public AudioClip m_ImpactSound;
    public float m_VolumeGain = 1.0f;
    public Vector2 m_PitchRange = new Vector2(0.95f, 1.05f);

    private void OnCollisionEnter(Collision _Col){
        Vector3 t_ContactPoint = _Col.contacts[0].point;
        float t_Velocity = _Col.relativeVelocity.magnitude;

        if (m_ImpactSound != null){
            // Temporary object that carries the audio source at the contact point
            GameObject t_ColAudio = new GameObject();
            t_ColAudio.name = "CollisionAudio";
            t_ColAudio.transform.position = t_ContactPoint;

            AudioSource t_Audio = t_ColAudio.AddComponent<AudioSource>();
            t_Audio.spatialBlend = 1.0f; // fully 3D sound
            t_Audio.minDistance = 0.1f;
            t_Audio.maxDistance = 1.0f;
            t_Audio.pitch = Random.Range(m_PitchRange.x, m_PitchRange.y);
            t_Audio.PlayOneShot(m_ImpactSound, t_Velocity * m_VolumeGain);

            // Remove the temporary object once the clip has finished;
            // the pitch scales the playback speed, so it also scales the duration
            Destroy(t_ColAudio, m_ImpactSound.length / t_Audio.pitch);
        }
    }
}
```

To help the participant understand which steps need to be taken, an interface is needed. One of the features of VR is the ability to perceive depth, so I used that to my advantage to create a multi-layered 3D interface. It consists of a dynamic task list, which updates as tasks are completed, and a holographic representation of which step to take next. The interface can optionally be turned off, depending on the level of difficulty that is set in the application; an expert mechanic should of course be able to memorize the order.
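The logic behind such a dynamic task list can be sketched in plain C#, independent of Unity. This is only an illustration under assumptions: the class `TaskList`, its members, and the step names in the usage example are hypothetical and not taken from the project. The idea is that only the current step can be completed, and the current step is what a holographic hint would point at.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical ordered task list: steps must be completed in sequence.
public class TaskList
{
    private readonly List<string> m_Steps;
    private int m_Current;

    public TaskList(IEnumerable<string> _Steps){
        m_Steps = new List<string>(_Steps);
    }

    // True once every step has been completed
    public bool IsFinished => m_Current >= m_Steps.Count;

    // The step a hint would highlight, or null when everything is done
    public string CurrentStep => IsFinished ? null : m_Steps[m_Current];

    // Advances and returns true only when the reported step is the expected one
    public bool TryComplete(string _Step){
        if (IsFinished || m_Steps[m_Current] != _Step)
            return false;
        m_Current++;
        return true;
    }
}
```

With the made-up steps `"Remove axle nut"` and `"Remove wheel"`, calling `TryComplete("Remove wheel")` first returns `false` and leaves the list unchanged, while completing the steps in order advances `CurrentStep` until `IsFinished` becomes `true`. A difficulty setting could then simply decide whether `CurrentStep` is shown to the trainee.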